Common Issues & Error Messages

A. Registration Validations

-

Required field missing

- Missing required field cutover_required

- "Missing field engine, please provide one of ['mysql', 'mssql', 'postgres']"

- "Missing both `db_details` and `source_db_names`, "please provide at least one of them."

-

Missing required field scalearc_endpoint.

-

Wrong input values provided

- "Invalid value engine=None, should be one of ['mysql', 'mssql', 'postgres']"

- 'Invalid scalearc_cutover_duration=x, Underlying error AssertionError: scalearc_cutover_duration=x should be >= 10 minutes'

- Invalid scalearc_cutover_lag_threshold=x, Underlying error AssertionError: scalearc_cutover_lag_threshold=x should be > zero

-

Invalid scalearc_cutover_start_time=x

- Should be in hh:mm format.

- Hour hh in scalearc_cutover_start_time should be from 0 to 23, given hour=x

- Minute mm in scalearc_cutover_start_time should be from 0 to 59

- Value for source_port should be an integer

- Value for target_port should be an integer

-

Expected {password} to be already decrypted, looks encrypted, starting with …

Provide the decrypted password for source_password, target_password, scalearc_password - Source and target username should be the same in a ScaleArc cluster, as cutover is required.

-

Source and target password should be the same in a ScaleArc cluster, as cutover is required.

Follow Step 6 in the Guidelines for preparing databases for migration for a fix for (h) and (i)

-

ScaleArc cluster validations

-

Could not fetch cluster details for cluster = x

Create a cluster and ensure it is up and running before initiating a migration. -

Expected cluster with cluster_id=X to be of type ..., found ... . Please check the cluster once.

You are running a migration for example MySQL database and you have provided Postgres cluster details.

Verify that the cluster details provided are correct as per the migration database. - Expected cluster with {cluster_id=} to have exactly two servers added.

One for the source database and one for the target database. - Expected one of the servers to be in "Read + Write" mode, found none.

- Expected one of the servers to be in "Standby, No Traffic" mode, found none.

- Could not find the source server with "Read + Write" mode.

- Source server host in {cluster_id=} does not match the expected value. Expected X to be in "Read + Write", found Y to be in "Read + Write". Please check the servers are in the correct roles once more.

- Source server port in {cluster_id=} does not match the expected value. Expected X to be in "Read + Write", found Y to be in "Read + Write". Please check the servers are in the correct roles.

- Could not find the target server with "Standby, No Traffic" mode.

- Target server host in {cluster_id=} does not match the expected value.

Expected X to be in "Standby, No Traffic", found Y to be in "Standby, No Traffic". Please check the servers are in the correct roles. -

Target server port in {cluster_id=} does not match the expected value.

Expected X to be in "Standby, No Traffic", found Y to be in "Standby, No Traffic". Please check the servers are in the correct roles.

Follow Step 4 in the Guidelines for preparing databases for migration for errors from (f) to (k). -

Source and target host and port should be different. It is dangerous to use the same source and target host:port as DMS would wipe/drop your target database.

You have provided the same host and port for the source and target database. This is dangerous because you are trying to clone the source database at the same host and port.

Please provide the different source and target host, port. -

Target {db_name=} is not empty. It contains X tables. Delete ensure that you provide a fresh empty target database for migration, else data in your target database will be wiped/dropped by DMS.

The target database should be empty to initiate a migration. Ensure this before starting the migration.

-

Could not fetch cluster details for cluster = x

-

Other registration validations

- Could not log in to the ScaleArc endpoint at X, please check the ScaleArc access.

-

"Target database does not exist target_uri=XX, and could not even create it yourself"

Insight: Either the given user does not have permission to create the database, or the create database query failed.

Fix: Create the target database before initiating a migration or provide users with sufficient permissions to create the database. -

Failed to connect to source database {connection_uri}

Failed to connect to target database {connection_uri}

The above message can occur due to multiple reasons. Below are the troubleshooting steps for the same:- Verify that the source/target host, port, username, password are correctly provided.

- Set up a VPC peering with this AWS account(VPC details:

CN-Development VPC vpc-0156cd9a85b4b3fac).

This is required as we need to be able to access source and target databases from our DMS replication service. -

Allow connections from Devflows to your databases by creating a VPC peering between your account where the source and target databases are present to the below Devflows account:

AWS Account ID : 346945241475

VPC ID: vpc-027288988b1ac3149

The fix implemented in the below example is specific to the instances mentioned. In your case, it will be different as per your network settings:-

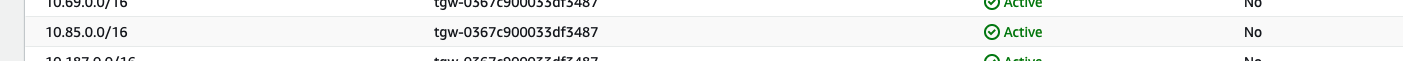

Add route on tgw in your AWS account

-

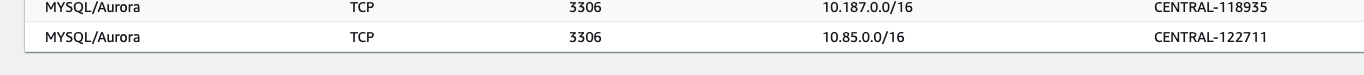

Modify Security group to allow CN-Production Network

This is to make sure the security group of the DB instance is not blocking the Devflows VPC CIDR. Add below two CIDRs in the security group of the DB instance.

-

Add route on tgw in your AWS account

B. DMS Errors

-

Last Error Replication task out of memory. Stop Reason FATAL_ERROR Error Level FATAL

(OR)

Last Error Not enough memory to allocate.

-

- This happens when the Replication instance used by Scalearc runs out of memory during the migration.

- This is because DMS doesn't suggest any specific instance type for different kinds of DB sizes or structures or LOB sizes that we specify.

-

ScaleArc Scales the instance type based on the “source_db_size” input provided as follows ( or sum of source_db_sizes in case of multi migration):

source_db_size

Replication instance type

<= 1

DMS_T3_MEDIUM

<= 4

DMS_T3_LARGE

<= 20

DMS_R4_LARGE

<= 50

DMS_R4_XLARGE

<= 100

DMS_R4_2XLARGE

<= 200

DMS_R5_4XLARGE

<= 400

DMS_R5_12XLARGE

> 750

DMS_R5_16XLARGE

- You can scale your Replication instance type by modifying your source_db_size according to the table given above.

-

An alternate way:

- There is a high chance of occurrence of this issue if you use Limited LOB mode with high LOB size.

- Thus, you can tackle this by switching your mode to Full LOB mode with a less than (1/10)th of the LOB size used in Limited LOB mode.

- This mode of operation can be slower but uses much less memory as compared to the previous mode.

- Thus, preventing the replication instance from going out of memory.

-

In some cases, you might specify the DATETIME and TIMESTAMP data types with a "zero" value (that is, 0000-00-00). If so, make sure that the target database in the replication task supports "zero" values for the DATETIME and TIMESTAMP data types. Otherwise, these values are recorded as null on the target.

-

Make sure you set the following MySQL config variables in the target:

sql_mode = 'ONLY_FULL_GROUP_BY,STRICT_TRANS_TABLES,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION'; - The above variables can be set by editing the `/etc/my.cnf` (or the location of the conf file in your server) and restarting the server.

-

Another way of setting them would be to set global variables with the following syntax:

SET GLOBAL sql_mode = 'ONLY_FULL_GROUP_BY,STRICT_TRANS_TABLES,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION';

[Important note: Variables set using ‘SET GLOBAL’ will be reset during server restart]

-

Make sure you set the following MySQL config variables in the target:

- Last Error Fatal error has occurred Task error notification received from subtask 2, thread 1 [reptask/replicationtask.c:2822] [1022505] Failed (retcode -1) to execute statement; RetCode: SQL_ERROR SqlState: 42000 NativeError: 1105 Message: [Microsoft][ODBC Driver 17 for SQL Server][SQL Server]Could not allocate space for object 'dbo.T1601_INV_LINE'.'PK_T1601' in database 'DTKE_CDKPROD' because the 'PRIMARY' filegroup is full. Create disk space by deleting unneeded files, dropping objects in the filegroup, adding additional files to the filegroup, or setting autogrowth on for existing files in the filegroup. Line: 1 Column: -1; Error executing data handler; Stream component failed at subtask 2, component st_2_UGHSPZMCHTABAKV2CIMS6ZV7A7IWLWIGHNTLJOI; Stream component 'st_2_UGHSPZMCHTABAKV2CIMS6ZV7A7IWLWIGHNTLJOI' terminated [reptask/replicationtask.c:2829] [1022505] Stop Reason FATAL_ERROR Error Level FATAL

C. Other Errors

-

MYSQL DB connection lost in between migration?

(pymysql.err.OperationalError) (2006, MySQL server has gone away (ConnectionResetError(104, 'Connection reset by peer')))-

Make sure you set the following MySQL config variables in both source and target DB:

max_allowed_packet=536870912;

interactive_timeout=86400;

wait_timeout=86400;

net_read_timeout = 3000;

net_write_timeout = 3000;

connect_timeout = 900;

innodb_lock_wait_timeout = 1800; - The above variables can be set by editing the `/etc/my.cnf` (or the location of the conf file in your server) and restarting the server.

-

Another way of setting them would be to set global variables with the following syntax:

SET GLOBAL <variable_name> = <value>

[Important note: Variables set using ‘SET GLOBAL’ will be reset during server restart]

-

Make sure you set the following MySQL config variables in both source and target DB:

Troubleshooting Guidelines

- Head over to the DMS console and monitor the tasks in more detail: https://console.aws.amazon.com/dms/v2/home?region=us-east-1#tasks

- Head over to the Devflows console to check traces in AWS X-ray and Kinaba and Cloudwatch: https://devflows-ui-devflows-prod.devhub.k8.devfactory.com/products/142/flows

- Please get clarification from the team, if something is ambiguous.

- Please get support from the DB Migration team in case of exceptions.

- Use the validation reports endpoint to retrieve detailed validation reports for a task_id. Contact the DB Migration team from Devflows for the URL and API key to use to download the validation report.